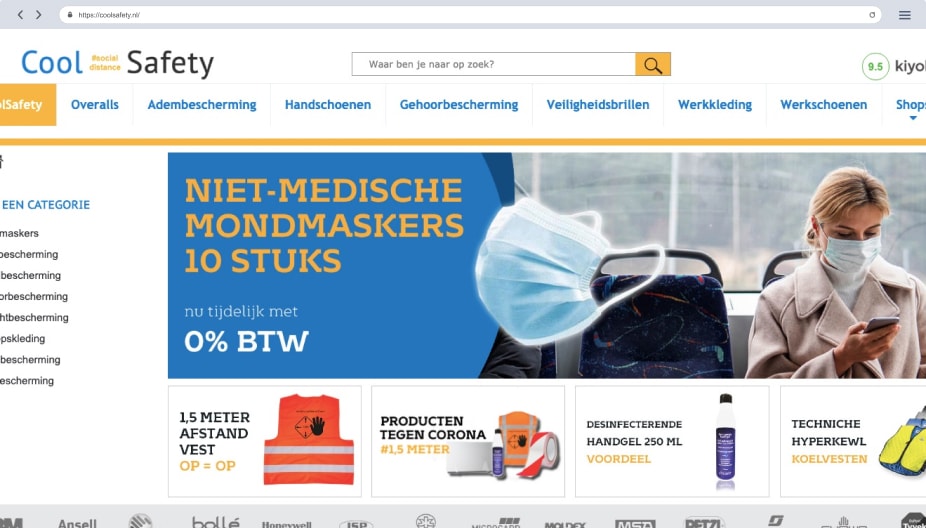

About the Client

CoolSafety.nl is a purely online retailer from the Netherlands selling protection equipment like gloves, helmets, and dust masks. They have been in the market for about 15 years and have a young yet experienced team.

CoolSafety.nl is a meta store for its various sub-stores, with a separate subdomain for each vertical of the protection market. If you’d like to buy protection for “your face”, for example, you would visit one of these dedicated stores.

Across all their domains, they get 150,000 visitors per month. Their average basket size is about 85 euros.

Rosa Starmans, project manager at CoolSafety, explained the details of their story with Prisync. She said that “implementing a price comparison tool to the company” was one of the most important tasks she has handled successfully.

Before using Prisync

Regarding tracking competitor prices, Rosa said:

“It was an impossible task for us to complete before Prisync because of the manual work needed to track our competitors’ prices properly and continuously.”

Sounds familiar, doesn’t it?

“We were able to track only 25 products before Prisync, mostly selected from our top-selling products. The project wasn’t very structured or continuous; we were just checking the prices from time to time.”

Why Prisync?

We asked Rosa why she chose Prisync as her competitor price tracking tool. She replied:

- Prisync is really easy to use, no complicated interfaces. You can easily manage all functions yourself.

- Being able to track an unlimited number of competitors is just awesome, and it’s a truly unique proposition among the alternatives.

- I also like the company culture of Prisync. The team is always ready to help with any question or problem you have, and they really help you use the system to its full potential.

After using Prisync

Rosa shared a lot of great results with us. Let’s check them out:

“With the data Prisync provides, we’re able to react to market changes faster, as we’re now tracking our competitors’ prices in a structured and continuous way.”

Rosa Starmans / Project Manager at CoolSafety

According to Rosa, they check all price changes daily and take action when needed.

Rosa told us that they lowered one product’s price by 15 euros because it was priced slightly above the market. With this simple action, that product generated 2,500 euros more turnover than in the previous month.

“Before Prisync, we were only able to track about 25 products across a few competitors, but now we are tracking more than 500 products on almost 500 different websites, thanks to Prisync’s automation.”

Future Plans

CoolSafety is also using Prisync to track prices in markets they don’t sell in yet, so they can gain insights and set up their first pricing structure for those markets accordingly.
